Turning Amazon Furniture Reviews into Actionable Insights

Explore the live dashboard.

How it started

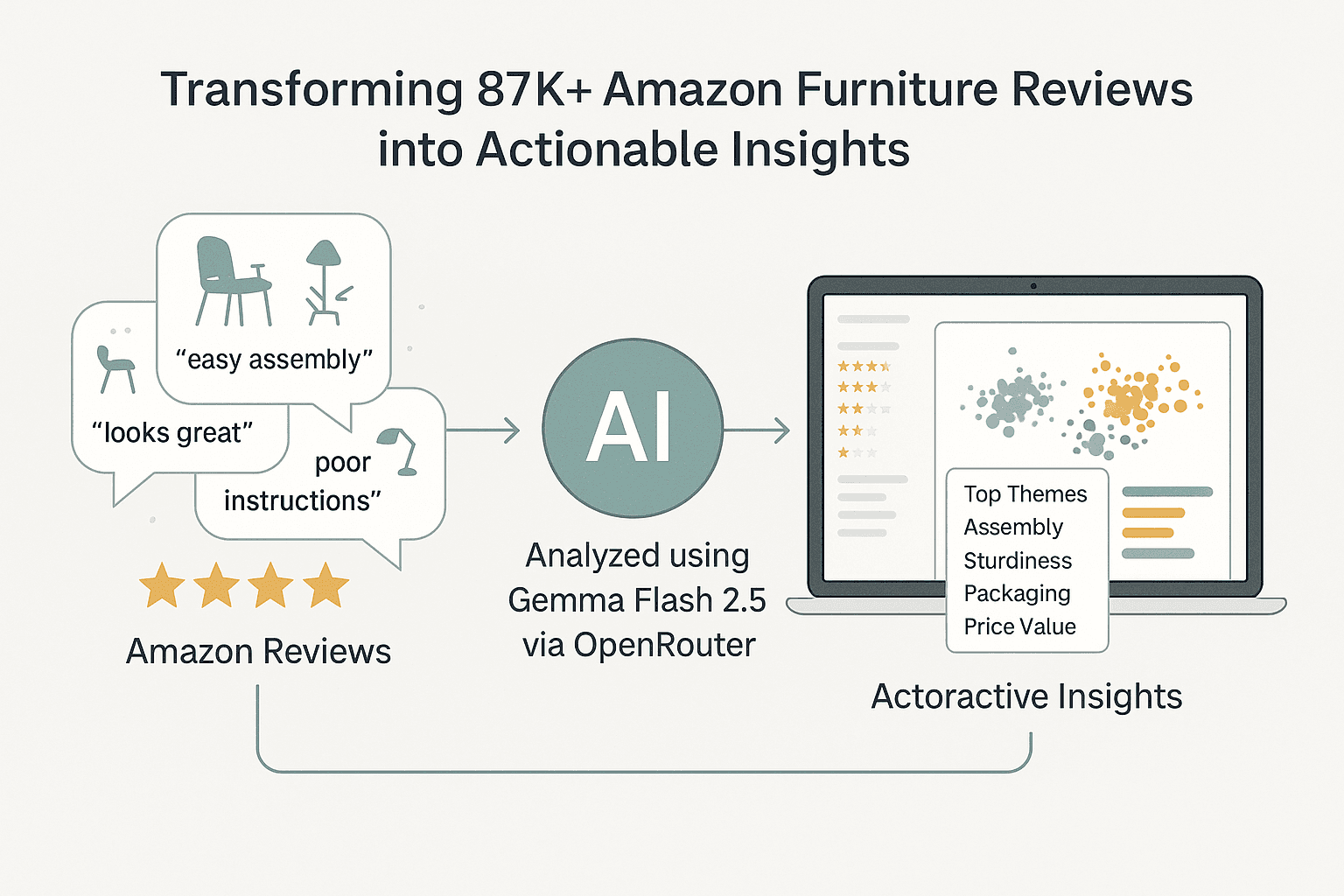

When I first looked at thousands of Amazon furniture reviews, I realized they held more than just opinions; they were mini research studies written by real customers. Comments about “easy assembly,” “missing screws,” or “beautiful design” revealed not just satisfaction but recurring patterns of experience.

That curiosity about how to uncover meaningful insights from unstructured text sparked this project.

The approach

I built an end-to-end AI pipeline that extracts, clusters, and visualizes insights at scale, powered by the Gemma Flash 2.5 model on OpenRouter.

LLM-powered extraction: Each review was distilled asynchronously by the Gemma Flash 2.5 model through the OpenRouter API, which returned structured spans for pain points, positives, themes, and purchase factors.
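The extraction step can be sketched as a request builder plus a defensive parser for the model's reply. This is a minimal sketch, not the project's code: the model slug, the prompt wording, and the JSON key names are all assumptions. It relies only on OpenRouter exposing an OpenAI-compatible chat-completions endpoint, where the reply text arrives in `choices[0].message.content`.

```python
import json
from dataclasses import dataclass, field

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL = "provider/model-name"  # hypothetical placeholder; use the slug OpenRouter lists
FIELDS = ("pain_points", "positives", "themes", "purchase_factors")  # assumed key names
FENCE = "`" * 3  # models sometimes wrap JSON in a markdown code fence

SYSTEM_PROMPT = (
    "Extract short verbatim spans from this furniture review. "
    "Reply with JSON only, using the keys " + ", ".join(FIELDS) + ". "
    "Each value is a list of strings; use [] when nothing applies."
)


@dataclass
class Extraction:
    pain_points: list[str] = field(default_factory=list)
    positives: list[str] = field(default_factory=list)
    themes: list[str] = field(default_factory=list)
    purchase_factors: list[str] = field(default_factory=list)


def build_payload(review_text: str) -> dict:
    """Request body for an OpenAI-compatible chat-completions endpoint."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": review_text},
        ],
        "temperature": 0,  # favour repeatable extractions
    }


def parse_extraction(content: str) -> Extraction:
    """Turn the model's reply text into an Extraction, tolerating fences and missing keys."""
    text = content.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (and its language tag), then everything from the closing fence on.
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.split(FENCE, 1)[0]
    data = json.loads(text)
    spans = {}
    for key in FIELDS:
        values = data.get(key) or []
        if isinstance(values, str):
            values = [values]  # tolerate a bare string where a list was expected
        spans[key] = [str(v).strip() for v in values if str(v).strip()]
    return Extraction(**spans)
```

The HTTP call is left out so the sketch runs offline. With an async client such as `httpx.AsyncClient`, you would POST `build_payload(text)` to `OPENROUTER_URL` with an `Authorization: Bearer <key>` header and pass the reply content to `parse_extraction`.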

Concurrent orchestration: Using Python’s asyncio, I processed reviews concurrently and checkpointed results every 1,000 batches, so a transient API or network failure never cost more than the work done since the last checkpoint.
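One way to combine asyncio concurrency with checkpointing is sketched below. It assumes a hypothetical `extract` coroutine (for example, one wrapping an OpenRouter request), a JSON checkpoint file keyed by review ID, and retry with exponential backoff for connection and timeout errors. The batch size, concurrency cap, and retry counts are illustrative, not the project's settings.

```python
import asyncio
import json
import random
from pathlib import Path
from typing import Awaitable, Callable


async def with_retries(make_call, attempts=4, base_delay=0.5):
    """Await make_call(), retrying transient failures with exponential backoff and jitter."""
    for attempt in range(attempts):
        try:
            return await make_call()
        except (ConnectionError, TimeoutError):
            if attempt == attempts - 1:
                raise  # out of retries: surface the error
            await asyncio.sleep(base_delay * 2**attempt + random.uniform(0, base_delay))


async def process_all(
    reviews: dict[str, str],
    extract: Callable[[str], Awaitable[dict]],  # hypothetical extraction coroutine
    checkpoint: Path,
    batch_size: int = 50,
    checkpoint_every: int = 1000,
    max_concurrency: int = 16,
) -> dict[str, dict]:
    """Run extract() over every review that is not already in the checkpoint file."""
    # Resume: anything already checkpointed is skipped.
    done = json.loads(checkpoint.read_text()) if checkpoint.exists() else {}
    pending = [rid for rid in reviews if rid not in done]
    batches = [pending[i:i + batch_size] for i in range(0, len(pending), batch_size)]
    limit = asyncio.Semaphore(max_concurrency)  # cap on in-flight API calls

    async def run_one(rid):
        async with limit:
            result = await with_retries(lambda: extract(reviews[rid]))
        return rid, result

    for n, batch in enumerate(batches, start=1):
        for rid, result in await asyncio.gather(*(run_one(r) for r in batch)):
            done[rid] = result
        if n % checkpoint_every == 0 or n == len(batches):
            # Write to a temp file, then swap it in, so a crash mid-write cannot corrupt the checkpoint.
            tmp = checkpoint.with_suffix(".tmp")
            tmp.write_text(json.dumps(done))
            tmp.replace(checkpoint)
    return done
```

If retries are exhausted, the exception propagates out of `asyncio.gather`; rerunning `process_all` then skips every review already saved in the checkpoint.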

Semantic canonicalization: Extracted phrases were embedded with a SentenceTransformer model and clustered in two stages (a tight similarity threshold first, then a looser one) to merge near-duplicates such as “easy to assemble” and “assembly was simple.”
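The post does not say which clustering algorithm backs the two stages. The sketch below assumes a simple greedy (leader) clustering over cosine similarity, with thresholds of 0.9 and 0.75 chosen purely for illustration. Embeddings are passed in as plain lists so it runs without downloading a model; in practice they could come from `SentenceTransformer("all-MiniLM-L6-v2").encode(phrases, normalize_embeddings=True)`, where the model name is also an assumption.

```python
import math
from collections import Counter


def _normalize(v):
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def _cos(a, b):
    # Inputs are unit-normalized, so the dot product equals cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def _centroid(vectors):
    return _normalize([sum(col) / len(vectors) for col in zip(*vectors)])


def greedy_cluster(vectors, threshold):
    """Leader clustering: join the most similar cluster if similarity >= threshold, else start a new one."""
    clusters, centroids = [], []
    for i, v in enumerate(vectors):
        best, best_sim = None, threshold
        for c, cen in enumerate(centroids):
            sim = _cos(v, cen)
            if sim >= best_sim:
                best, best_sim = c, sim
        if best is None:
            clusters.append([i])
            centroids.append(v)
        else:
            clusters[best].append(i)
            centroids[best] = _centroid([vectors[j] for j in clusters[best]])
    return clusters, centroids


def canonicalize(phrases, embeddings, tight=0.9, loose=0.75):
    """Map each phrase to a canonical label via tight-then-loose clustering."""
    vecs = [_normalize(e) for e in embeddings]
    # Stage 1: a tight threshold merges near-identical phrasings.
    tight_groups, tight_centroids = greedy_cluster(vecs, tight)
    # Stage 2: a looser threshold merges stage-1 groups via their (unweighted) centroids.
    loose_groups, _ = greedy_cluster(tight_centroids, loose)
    mapping = {}
    for group in loose_groups:
        members = [i for g in group for i in tight_groups[g]]
        # Label each final cluster with its most frequent phrase (ties go to the first seen).
        label = Counter(phrases[i] for i in members).most_common(1)[0][0]
        for i in members:
            mapping[phrases[i]] = label
    return mapping
```

Running the loose pass on stage-1 centroids rather than on raw phrases keeps one noisy phrasing from chaining unrelated groups together.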

Deeper review grouping: Dimensionality reduction (PCA → t-SNE) and KMeans clustering on 87K+ reviews revealed recurring product-experience patterns, from durability concerns to aesthetic satisfaction.
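The KMeans step can be illustrated with a dependency-free version of Lloyd's algorithm. This sketch assumes the reviews are already reduced to low-dimensional vectors (in practice, scikit-learn's `PCA`, `TSNE`, and `KMeans` classes cover all three steps), and it swaps in a deterministic farthest-point initialization in place of k-means++ so results are reproducible.

```python
def _dist2(a, b):
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, max_iter=100):
    """Lloyd's k-means. Returns (labels, centers)."""
    # Deterministic farthest-point init: start at the first point, then keep adding
    # the point farthest from every center chosen so far.
    centers = [list(points[0])]
    while len(centers) < k:
        farthest = max(points, key=lambda p: min(_dist2(p, c) for c in centers))
        centers.append(list(farthest))
    labels = None
    for _ in range(max_iter):
        # Assignment step: every point joins its nearest center.
        new_labels = [min(range(k), key=lambda c: _dist2(p, centers[c])) for p in points]
        if new_labels == labels:
            break  # converged: no point changed cluster
        labels = new_labels
        # Update step: move each center to the mean of its members (empty clusters keep their center).
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centers
```

One caveat: t-SNE preserves local neighbourhoods but distorts global distances, so a common variant runs KMeans on the PCA output and reserves t-SNE for the 2-D plot.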

Comparative insights: Using the same LLM, I contrasted 5-star and 1-star reviews to uncover what actually differentiates top-rated products from poor performers.
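The comparison prompt might be assembled along these lines. This is a hypothetical sketch: the sample size, truncation length, and prompt wording are assumptions, and the resulting string would be sent as the user message through the same chat-completions call used for extraction.

```python
import random


def build_contrast_prompt(reviews, per_band=40, max_chars=600, seed=0):
    """reviews: iterable of (stars, text) pairs. Samples 5-star and 1-star reviews into one prompt."""
    reviews = list(reviews)
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    bands = {}
    for stars in (5, 1):
        texts = [text for s, text in reviews if s == stars]
        sample = rng.sample(texts, min(per_band, len(texts)))
        # Truncate long reviews so a single rant cannot dominate the context window.
        bands[stars] = "\n".join(f"- {t[:max_chars]}" for t in sample)
    return (
        "Below are 5-star and 1-star Amazon furniture reviews.\n"
        "List the factors that most clearly separate the two groups, "
        "citing short quotes as evidence.\n\n"
        f"5-STAR REVIEWS:\n{bands[5]}\n\n1-STAR REVIEWS:\n{bands[1]}"
    )
```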

The Streamlit experience

All these layers come together in an interactive Streamlit dashboard.

The dashboard enables you to:

- Filter reviews by rating band
- Explore semantic clusters visually
- Hover over extracted insights to reveal context
- Compare high- vs low-rating product segments side by side
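Behind the rating-band filter, the logic can be as small as a lookup table. The band names and boundaries below (1-2, 3, and 4-5 stars) are assumptions, since the post does not define them.

```python
# Hypothetical band boundaries (inclusive star ranges).
BANDS = {"low": (1, 2), "mid": (3, 3), "high": (4, 5)}


def rating_band(stars: int) -> str:
    """Return the name of the band containing a star rating."""
    for name, (lo, hi) in BANDS.items():
        if lo <= stars <= hi:
            return name
    raise ValueError(f"rating out of range: {stars}")


def filter_by_bands(rows, selected):
    """rows: dicts with a 'rating' key; selected: band names chosen in the UI."""
    wanted = set(selected)
    return [r for r in rows if rating_band(r["rating"]) in wanted]
```

In the app, the selected bands could come from `st.multiselect("Rating band", list(BANDS), default=list(BANDS))`, with the filtered rows then rendered by `st.dataframe`.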

Hosted on Streamlit Cloud and styled with subtle WSU cues, the dashboard turns raw review text into visual, decision-ready insights.

Key findings

Across 87,000+ reviews, several patterns emerged that reveal how customers think about furniture quality and value:

Ease of assembly and sturdiness were the strongest drivers of 5-star ratings, especially for self-assembly furniture.

Instruction clarity consistently influenced satisfaction: customers rewarded well-written manuals almost as much as product durability.

Design, colour, and comfort added emotional appeal but were rarely decisive without solid quality and packaging.

Logistics pain points like late delivery, missing screws, or poor packaging often overshadowed otherwise good experiences.

Perceived value for price repeatedly appeared as a hidden success factor: customers mentioned being happy when the product “looked more expensive than it was.”

Review length analysis showed that 1-star reviewers wrote texts roughly 2× longer, usually blending frustration with detailed accounts of what went wrong, which makes them useful signals for detecting systemic product flaws.

Seasonal sentiment shifts suggested slightly higher satisfaction around holiday seasons, possibly due to delivery prioritization or improved packaging during peak months.
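The review-length and seasonality findings are simple group-by aggregations. The sketch below shows one way to compute them; it assumes each review is a dict with hypothetical `rating`, `text`, and `date` fields, measures length in words (characters would work equally well), and does not reproduce the reported numbers.

```python
from collections import defaultdict
from statistics import mean


def mean_length_by_rating(reviews):
    """Return {star rating: mean word count}."""
    lengths = defaultdict(list)
    for r in reviews:
        lengths[r["rating"]].append(len(r["text"].split()))
    return {stars: mean(v) for stars, v in sorted(lengths.items())}


def mean_rating_by_month(reviews):
    """Return {calendar month: mean rating}; 'date' must be a datetime.date. Pools all years."""
    by_month = defaultdict(list)
    for r in reviews:
        by_month[r["date"].month].append(r["rating"])
    return {m: mean(v) for m, v in sorted(by_month.items())}
```

Dividing the 1-star mean length by another band's mean gives a ratio comparable to the 2× figure, and because the monthly averages pool every year, a holiday bump is worth confirming year by year.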