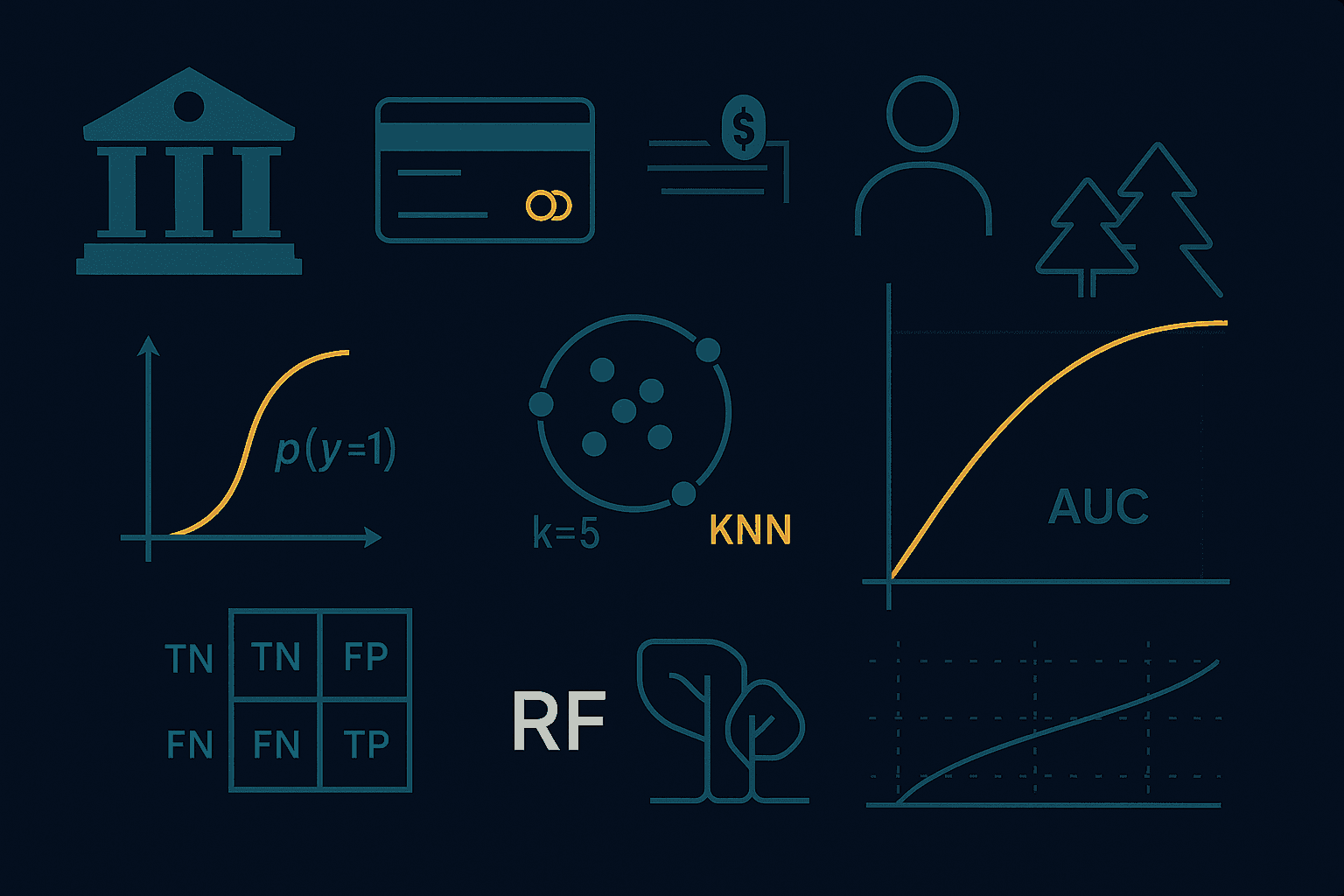

Predicting Term-Deposit Subscription (UCI Bank Marketing)

Goal:

Classify whether a customer will subscribe to a term deposit (y) using the UCI Bank Marketing dataset.

Data: 45,211 rows × 17 columns (original). Target is binary (y: yes/no).

Split: Stratified 80/20 train–test.
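A stratified 80/20 split can be sketched as follows. This is a minimal illustration on synthetic data, not the notebook's code: the array shapes, the minority rate, and `random_state=42` are assumptions chosen for the example.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the real data (assumption): 1,000 rows,
# with an arbitrary minority rate chosen only for illustration.
rng = np.random.default_rng(42)
X = rng.normal(size=(1000, 4))
y = (rng.random(1000) < 0.12).astype(int)

# stratify=y keeps the yes/no ratio nearly identical in both partitions.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.20, stratify=y, random_state=42
)

print(len(y_train), len(y_test))
print(round(y_train.mean(), 3), round(y_test.mean(), 3))
```

Without `stratify`, a random split of an imbalanced target can leave the test set with noticeably more or fewer positives than the training set, which makes recall estimates less stable.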

Preprocessing.

Binary mapping for default, housing, loan, and y (yes→1, no→0)

Ordinal encoding for education and month

One-hot encoding (drop-first) for job, marital, contact, poutcome
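The three encoding steps above might look like this in pandas. The toy rows are illustrative, and the rank assigned to the "unknown" education level is an assumption, since the ordering used in the notebook is not stated here.

```python
import pandas as pd

# Toy rows mimicking a few columns of the dataset (values are illustrative).
df = pd.DataFrame({
    "default": ["no", "yes", "no"],
    "housing": ["yes", "no", "yes"],
    "loan": ["no", "no", "yes"],
    "education": ["primary", "tertiary", "secondary"],
    "month": ["may", "jan", "dec"],
    "job": ["admin.", "technician", "admin."],
    "marital": ["married", "single", "divorced"],
    "contact": ["cellular", "unknown", "cellular"],
    "poutcome": ["unknown", "success", "failure"],
    "y": ["no", "yes", "no"],
})

# Binary mapping: yes -> 1, no -> 0.
for col in ["default", "housing", "loan", "y"]:
    df[col] = df[col].map({"yes": 1, "no": 0})

# Ordinal encoding. Placing "unknown" at rank 0 is an assumption.
education_order = {"unknown": 0, "primary": 1, "secondary": 2, "tertiary": 3}
month_names = ["jan", "feb", "mar", "apr", "may", "jun",
               "jul", "aug", "sep", "oct", "nov", "dec"]
month_order = {name: i for i, name in enumerate(month_names, start=1)}
df["education"] = df["education"].map(education_order)
df["month"] = df["month"].map(month_order)

# One-hot encoding, dropping the first level of each feature.
encoded = pd.get_dummies(
    df, columns=["job", "marital", "contact", "poutcome"], drop_first=True
)
print(encoded.columns.tolist())
```

Dropping the first level avoids perfectly collinear dummy columns, which matters for the Logit model more than for the tree-based or distance-based models.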

Models.

Logistic Regression (statsmodels Logit)

KNN (k=5) with StandardScaler

Random Forest (n_estimators=100, max_depth=10, max_features='sqrt', min_samples_split=5, min_samples_leaf=3, random_state=42)
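As a rough sketch, the KNN and Random Forest configurations listed above could be built in scikit-learn as shown below. The synthetic data from `make_classification` and the names `X_demo` and `y_demo` are assumptions for illustration, and the statsmodels Logit model is left out to keep the example short.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic, imbalanced stand-in for the encoded training data (assumption).
X_demo, y_demo = make_classification(
    n_samples=500, n_features=8, weights=[0.88, 0.12], random_state=42
)

# Scaling inside the pipeline keeps distances comparable across features
# and ensures the scaler is fit only on the data passed to fit().
knn = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))

# Random Forest with the hyperparameters listed in this README.
rf = RandomForestClassifier(
    n_estimators=100, max_depth=10, max_features="sqrt",
    min_samples_split=5, min_samples_leaf=3, random_state=42,
)

knn.fit(X_demo, y_demo)
rf.fit(X_demo, y_demo)
print(knn.predict(X_demo[:3]))
print(rf.predict_proba(X_demo[:3])[:, 1])
```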

Evaluation (Test set).

To improve sensitivity to the minority “yes” class, I evaluated Logistic Regression and Random Forest at a decision threshold of 0.20 rather than the conventional 0.50. KNN was evaluated on its class predictions.
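Applying a custom threshold amounts to comparing the predicted probability of the positive class against the cutoff. The helper below is a hypothetical sketch: the function name is invented for illustration, and whether the notebook uses `>=` or `>` at the boundary is an assumption.

```python
import numpy as np

def predict_at_threshold(proba_yes, threshold=0.20):
    """Label a row 1 ("yes") when its predicted probability meets the threshold."""
    return (np.asarray(proba_yes) >= threshold).astype(int)

# Illustrative probabilities, not actual model output.
proba = np.array([0.05, 0.19, 0.20, 0.35, 0.80])

print(predict_at_threshold(proba))        # cutoff 0.20
print(predict_at_threshold(proba, 0.50))  # conventional cutoff
```

With a fitted scikit-learn classifier, `proba_yes` would typically be `model.predict_proba(X_test)[:, 1]`. Lowering the cutoff labels more rows as "yes", which raises recall at the cost of precision.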

Random Forest (threshold 0.20)

Accuracy 0.88

Positive class (y=1): Precision 0.48, Recall 0.77, F1 0.59

Logistic Regression (threshold 0.20)

Accuracy 0.88

Positive class: Precision 0.47, Recall 0.62, F1 0.54

KNN (k=5)

Accuracy 0.89

Positive class: Precision 0.56, Recall 0.30, F1 0.39

Why Random Forest?

Although KNN achieved slightly higher accuracy (0.89 vs. 0.88), the business goal prioritizes catching subscribers, which means higher recall on y=1. Random Forest delivered the best recall (0.77) and the strongest F1 (0.59) for the positive class, so it was selected.
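The F1 values can be sanity-checked from the reported precision and recall, since F1 is their harmonic mean. The sketch below uses only the two-decimal figures listed in the evaluation section; the function and variable names are hypothetical.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# (precision, recall) for the positive class, as reported above.
reported = {
    "Random Forest": (0.48, 0.77),
    "Logistic Regression": (0.47, 0.62),
    "KNN": (0.56, 0.30),
}

f1_by_model = {name: f1_from(p, r) for name, (p, r) in reported.items()}
for name, f1 in f1_by_model.items():
    print(f"{name}: {f1:.3f}")
```

Because the inputs are already rounded to two decimals, a recomputed value can differ from the reported F1 in the last digit. The ranking of the three models is unaffected.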

Artifacts.

Confusion Matrices: generated for each model (see notebook)

ROC Curves: plotted to compare Logistic Regression, KNN, and Random Forest

What I learned / Next steps.

Threshold tuning meaningfully shifts precision–recall trade-offs for imbalanced targets. Next steps:

- Address class imbalance (class_weight='balanced', or SMOTE/undersampling).

- Tune RF hyperparameters.

- Add probability calibration.

- Provide model explanations (e.g., feature importances/SHAP).
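Some of these follow-ups might start from a sketch like the one below, which is not part of the current notebook. It runs on synthetic data, and the choices of sigmoid calibration and `cv=3` are assumptions made for illustration.

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic, imbalanced stand-in for the training data (assumption).
X_demo, y_demo = make_classification(
    n_samples=600, n_features=8, weights=[0.88, 0.12], random_state=42
)

# class_weight="balanced" reweights classes inversely to their frequency.
weighted_rf = RandomForestClassifier(
    n_estimators=100, max_depth=10, class_weight="balanced", random_state=42
)
weighted_rf.fit(X_demo, y_demo)

# Impurity-based importances: one score per feature, summing to 1.
importances = weighted_rf.feature_importances_

# Wrap a fresh forest in cross-validated sigmoid calibration.
calibrated = CalibratedClassifierCV(
    RandomForestClassifier(n_estimators=100, max_depth=10, random_state=42),
    method="sigmoid", cv=3,
)
calibrated.fit(X_demo, y_demo)
proba_yes = calibrated.predict_proba(X_demo)[:, 1]
print(importances.round(3))
```

Calibrated probabilities make a fixed cutoff such as 0.20 easier to interpret, because the score then approximates an actual subscription rate.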